Python for

Scientific Computing

a.k.a. Python compilers for the masses

Proudly made in Namek by serge-sans-paille

/me

Serge « sans paille » Guelton

$ whoami

sguelton- R&D engineer at QuarksLab on compilation for security

- Associate researcher at Télécom Bretagne

- Core dev of the Open Source Pythran compiler for Numerical Python

Python for Science

- Portable, scriptable language

- Clean (?) syntax

- Rich ecosystem

- Enthusiast community, esp. in science

- Base Array Package, native data structure

- Exposes C routines

- linear algebra, Fourier transform and random number

- Stats

- Integration, Interpolate, Regression,

- Signal Processing

- Image Processing

- Clustering

- Sparse linear algebra...

Plotting for the Mass!

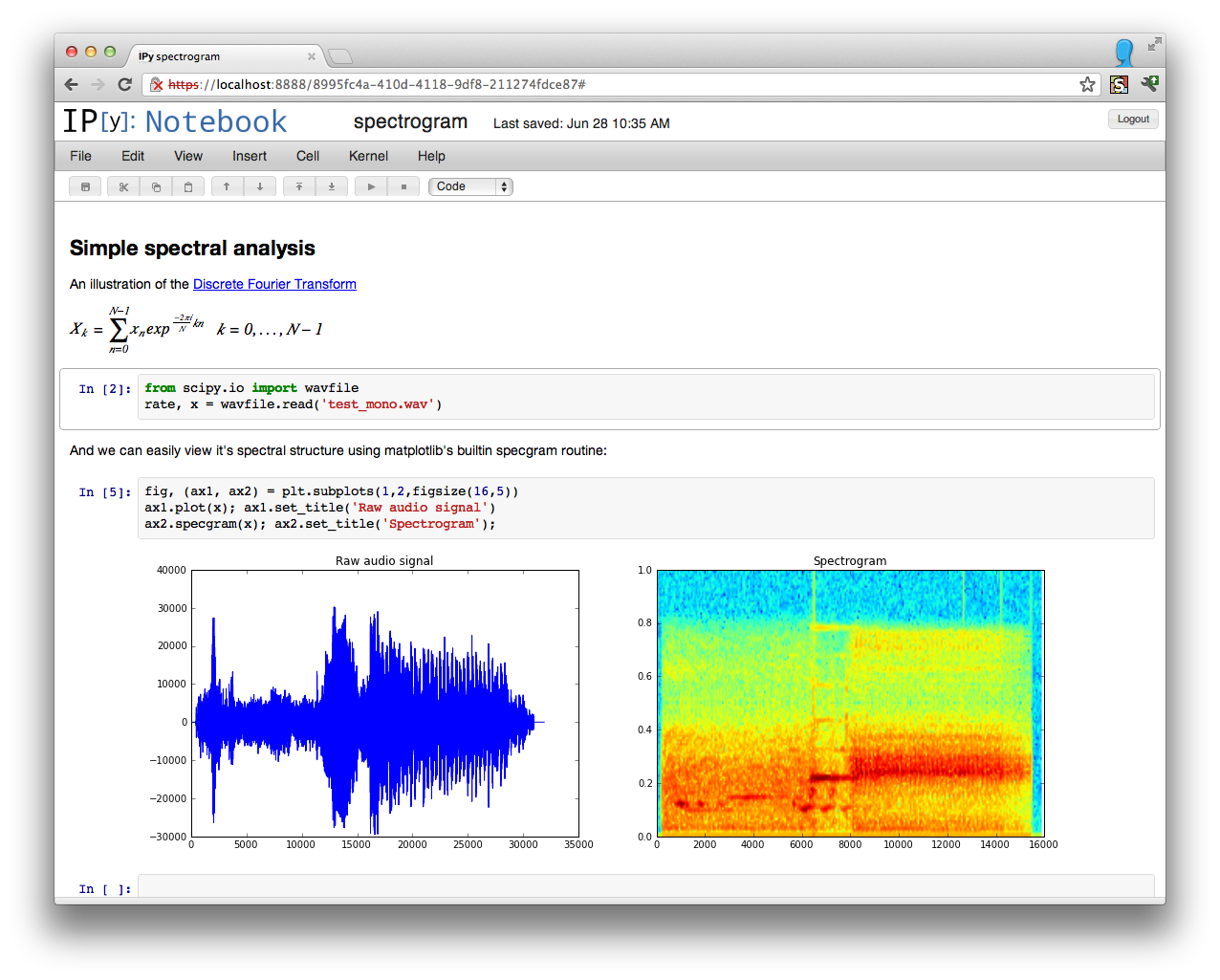

- Interactive shell with completion, history, magics...

- Notebook kernel for reproducible science

- Provides tools for parallel computing

And Many Others

- Symbolic mathematics with SymPy

- Data structure and analysis with pandas

- Machine learning with sckit-learn

- ...

What about Performance?

Myth #1: Python is slow

A language is not slow, but it can make it hard for compilers to make it run fast

Myth #2: Python is too dynamic for HPC

Scientific code written in Python looks like FORTRAN

function rosen(x, n)

real(8) :: x(n),t0(n-1), t1(n-1)

real(8) rosen

t0 = 100 * (x(2:n) - (x(1:n-1)** 2))** 2

t1 = (1 - x(1:n-1)** 2)

rosen = sum(t0 + t1)

end functionimport numpy as np

def rosen(x):

t0 = 100 * (x[1:] - x[:-1] ** 2) ** 2

t1 = (1 - x[:-1]) ** 2

return np.sum(t0 + t1)

Myth #3: NumPy is fast

Its design suffers from several critical drawbacks

- Spawns many intermediate array

- Very slow indexing (but relatively fast vector operations)

- No parallelization

- No vectorization

Uses the BLAS/MKL for linear algebra though

Here comes compilers

for Python/Numpy codes

Turn annotated code into C extension

- C with a Python look and feel

- Performance close to C

- Explicit Parallelism

- Can call C code

- Loose Backward compatibility

cdef inline int mandel(double real, double imag, int max_iterations=20):

cdef double z_real = 0., z_imag = 0.

cdef int i

for i in range(0, max_iterations):

z_real, z_imag = ( z_real*z_real - z_imag*z_imag + real,

2*z_real*z_imag + imag )

if (z_real*z_real + z_imag*z_imag) >= 4:

return i

return -1PyPy

- Just-In-Time compiler

- Targets full language support

- Limited native module support (incl. Numpy)

- Successful Numpypy project

def mandel(real, imag, max_iterations=20):

z_real, z_imag = 0., 0.

for i in range(0, max_iterations):

z_real, z_imag = ( z_real*z_real - z_imag*z_imag + real,

2*z_real*z_imag + imag )

if (z_real*z_real + z_imag*z_imag) >= 4:

return i

return -1Numba

- Just-In-Time compiler

- Strong industrial support

- GPGPU backend

- Works best on explicit loops

- Based on LLVM

from numba import autojit

@autojit

def mandel(real, imag, max_iterations=20):

z_real, z_imag = 0., 0.

for i in range(0, max_iterations):

z_real, z_imag = ( z_real*z_real - z_imag*z_imag + real,

2*z_real*z_imag + imag )

if (z_real*z_real + z_imag*z_imag) >= 4:

return i

return -1

- Just-In-Time compiler

- Supports a limited subset of Python, focused on Numpy

- Represents Numpy functions in terms a few skeletons

- Very efficient and easy to install

- Implicit parallelism (OpenMP | GPGPU)

from parakeet import jit

@jit

def mandel(real, imag, max_iterations=20):

z_real, z_imag = 0., 0.

for i in range(0, max_iterations):

z_real, z_imag = ( z_real*z_real - z_imag*z_imag + real,

2*z_real*z_imag + imag )

if (z_real*z_real + z_imag*z_imag) >= 4:

return i

return -1Pythran

- Ahead-Of-Time compiler

- Turn whole Python modules into native modules

- Supports a large subset of Python, including Exceptions, generators, named parameters...

- Requires exported function type annotation as comments

#pythran export mandel(float, float, int)

def mandel(real, imag, max_iterations=20):

z_real, z_imag = 0., 0.

for i in range(0, max_iterations):

z_real, z_imag = ( z_real*z_real - z_imag*z_imag + real,

2*z_real*z_imag + imag )

if (z_real*z_real + z_imag*z_imag) >= 4:

return i

return -1How do they Compare?

http://www.cs.sfu.ca/.../ggbaker

| Language | Implementation | Speedup |

|---|---|---|

| Python | CPython 2.7.6 | 1.0 |

| Python | CPython 2.7.6 with -O | 1.0 |

| Cython | Cython 0.20.1post0 | 1.6 |

| Annotated Cython | Cython 0.20.1post0 | 95.8 |

| Python3 | CPython 3.4.0 | 0.9 |

| Python | Jython 2.5.3 | 3.4 |

| Python | PyPy 2.3.1 | 82.8 |

| Python | Parakeet 0.23.2 | 68.8 |

| Python | Pythran 0.5.0 | 92.7 |

| C | GCC 4.8.2 with -O2 | 103.3 |

We don't need no loops

Python/Numpy == high level programming

reliab = zeros((N,N))

for i in range(N):

for j in range(N):

if random() < 0.6:

reliab[i,j] = 1

else:

reliab[i,j] = 0reliab = numpy.int32(numpy.random.rand(N,N) < 0.6)Crowd-sourced benchs

def cronbach(itemscores):

itemvars = itemscores.var(axis=1, ddof=1)

tscores = itemscores.sum(axis=0)

nitems = len(itemscores)

return nitems / (nitems-1) * (1 - itemvars.sum() / tscores.var(ddof=1))- Python, (num)PyPy, Numba, Parakeet, Pythran

- Cython not considered (not a Python compiler!)

Optimization Opportunities

def l2norm(x):

return np.sqrt(np.sum(np.abs(x) ** 2, axis=1))- Avoid temporary allocations from

np.abs(x)**2 - Do not rely on Numpy's

np.sum(..., axis=1) - Parallelize the

np.sum(..., axis=1) - Vectorize the

np.abs(x) ** 2

The Pythran way

#pythran export l2norm(float32[][])

def l2norm(x):

return np.sqrt(np.sum(np.abs(x)**2, axis=1))$> pythran l2norm.py -march=native -fopenmpRemove Temporaries

Loop Approach

Inlining + Loop fusion + scalarization + use-def elimination

->Expression Approach<-

Forward Substitution + Expression Templates

Loop Unrolling

A generic approach to loop unrolling

for i in range(3):

foo(i)(Forward Substitution +) Constant Folding

for i in [0, 1, 2]:

foo(i)Loop Full Unrolling

i = 0

foo(i)

i = 1

foo(i)

i = 2

foo(i)Use-def elimination...

Vectorization

Use SSE or AVX vector registers of modern CPUs

Pythran's runtime is partially vectorized thanks to Boost.SIMD

Vectorization Effect

Chef's Selection

| bench | seq (µs) | SSE | AVX |

|---|---|---|---|

| allpairs_distances | 1711 | 916 | 673 |

| arc_distance | 1020 | 613 | 414 |

| l2norm | 913 | 577 | 494 |

| rosen | 1234 | 1096 | 997 |

| slowparts | 1154 | 1176 | 848 |

| vibr_energy | 1518 | 769 | 570 |

Explicit Parallelization

OpenMP for Python

def hyantes(xmin, ymin, xmax, ymax, step, range_, range_x, range_y, t):

X,Y = t.shape

pt = np.zeros((X,Y))

#omp parallel for

for i in range(X):

for j in range(Y):

for k in t:

tmp = 6368.* np.arccos( np.cos(xmin+step*i)*np.cos( k[0] ) * np.cos((ymin+step*j)-k[1])+ np.sin(xmin+step*i)*np.sin(k[0]))

if tmp < range_:

pt[i,j]+=k[2] / (1+tmp)

return pt

Implicit Parallelization

Point-to-point operations

a = np.random.rand(n, m)

b = np.random.rand(3, n - 1)

c = a[1:,:-1] + 3 * b[i]Reductions

a = np.random.rand(n, m)

b = np.random.rand(n, m)

c = np.sum(a ** 2 + b ** 2)Performance Comparison

| Python | parakeet | pythran | -march=native | |

|---|---|---|---|---|

| allpairs_distances | 38129 | 1784 | 1713 | 667 |

| allpairs_distances_loops | 49750 | 1794 | 1681 | 1654 |

| conv | 1916161 | 2040 | 1879 | 1880 |

| growcut | 1967622 | 2203 | 5976 | 5841 |

| harris | 5595 | 5246 | 2949 | 2863 |

| hasting | 8 | 57 | 1 | 1 |

| hyantes | 255760 | 1882 | 1881 | 1820 |

| julia | 2761543 | 2938 | 2578 | 2504 |

| l2norm | 5483 | 12648 | 919 | 494 |

| multiple_sum | 3026 | 8394 | 1245 | 1029 |

| pairwise | 3742614 | 3708 | 3563 | 3507 |

| rosen | 14168 | 1625 | 1238 | 992 |

eDSL Toolbox

- Pythran is an embedded DSL

- Provides a toolbox for other eDSLs

- Language lowering (tuple unpacking, nested functions, named parameters...)

- Code Analyses (closure, aliasing, dependencies...)

Academic Results

- Pythran: Enabling Static Optimization of Scientific Python Programs, S. Guelton, P. Brunet et al. in CSD, under review

- Exploring the Vectorization of Python Constructs Using Pythran and Boost SIMD, S. Guelton, J. Falcou and P. Brunet, in WPMVP, 2014

- Compiling Python modules to native parallel modules using Pythran and OpenMP Annotations, S. Guelton, P. Brunet and M. Amini, in PyHPC, 2013

- Pythran: Enabling Static Optimization of Scientific Python Programs, S. Guelton, P. Brunet et al. in SciPy, 2013

Powered by Strong Engineering

Preprequisite for reproductible science

- 2773 test cases, incl. unit testing, doctest, Continuous integration

- Peer-reviewed code

- Python2.7 and C++11

- User and Developer doc: http://pythonhosted.org/pythran/

- Hosted on https://github.com/serge-sans-paille/pythran

- Releases on PyPi:

$ pip install pythran - Custom Debian repo:

$ apt-get install pythran

THE END

- AUTHORS

- Serge Guelton, Pierrick Brunet, Mehdi Amini, Adrien Merlini, Alan Raynaud, Eliott Coyac...

- FINANCIAL SUPPORT

- Silkan, -->You<--

- CONTRIBUTE

- #pythran on FreeNode, pythran@freelists.org, GitHub repo